AI agents built in Microsoft Copilot Studio can be abused to harvest OAuth tokens. Datadog researchers have documented the tactic, which tricks users into granting permissions to malicious agents. This post summarizes how the attack works, who is at risk, and practical steps to reduce exposure.

What happened — a quick summary

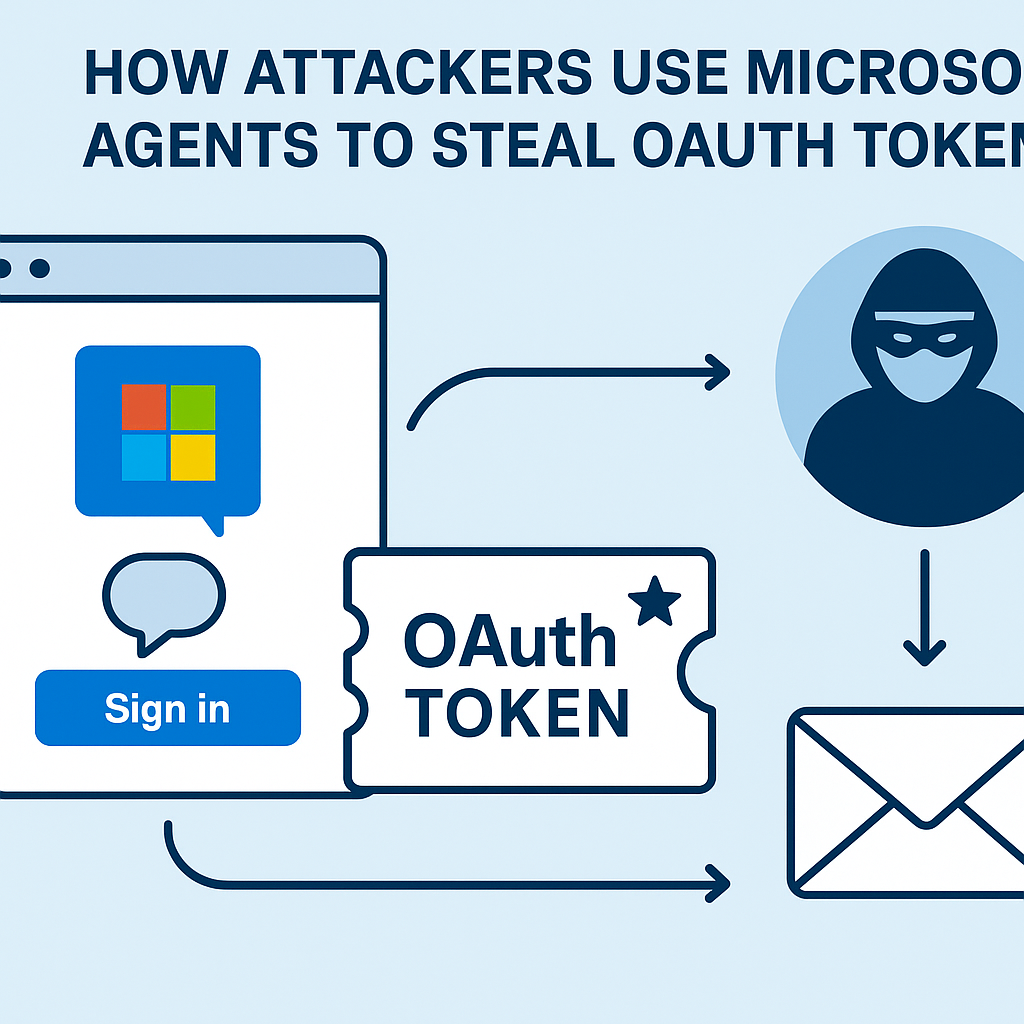

Researchers observed that attackers can create and share Copilot Studio agents with customized “topics” (agent workflows), presented on pages that look and behave like normal web pages, which prompt users to sign in and inadvertently grant OAuth permissions. The resulting tokens let attackers perform actions on behalf of the user, such as sending email or modifying calendar entries, effectively turning trusted identities into attack vectors.

How the attack works (in plain terms)

The abuse hinges on three pieces:

- Agent-as-page interface: The malicious agent is presented as a web-like page with a chat interface that asks the user to log in, which lowers suspicion.

- OAuth consent: When the user authenticates, the agent requests OAuth permissions. If granted, the agent receives tokens tied to that user account.

- Token misuse: With OAuth tokens, the attacker can perform actions (email, calendar updates, etc.) using the victim’s privileges without needing their password.
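
To make the consent step more concrete, here is a minimal Python sketch that pulls apart an OAuth 2.0 authorization URL and lists what it is asking for. The function name, the `HIGH_RISK_SCOPES` set, and the example URL are illustrative assumptions, not something taken from the Datadog research. The query parameters (`client_id`, `redirect_uri`, `scope`) are the standard OAuth 2.0 ones.

```python
from urllib.parse import urlparse, parse_qs

# Illustrative (not exhaustive) delegated scopes that allow sending or
# writing on the user's behalf. Adjust to your own risk model.
HIGH_RISK_SCOPES = {
    "Mail.Send",
    "Mail.ReadWrite",
    "Calendars.ReadWrite",
    "Files.ReadWrite.All",
}


def summarize_consent_request(url: str) -> dict:
    """Extract the parts of an OAuth 2.0 authorization URL worth reviewing."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)  # also percent-decodes the values

    # The scope parameter is a space-delimited list of permissions.
    scopes = params.get("scope", [""])[0].split()
    redirect_uri = params.get("redirect_uri", [""])[0]

    return {
        "authorize_host": parsed.hostname,
        "client_id": params.get("client_id", [""])[0],
        # Where the authorization response is sent after consent.
        "redirect_host": urlparse(redirect_uri).hostname,
        "scopes": scopes,
        "high_risk_scopes": sorted(s for s in scopes if s in HIGH_RISK_SCOPES),
    }
```

A genuine Microsoft host in `authorize_host` says nothing about who owns the application behind `client_id`, so the fields to scrutinize are the requested scopes and the redirect destination.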

Why this is especially tricky

Because the agent pages and consent flows can look like legitimate Microsoft pages and because Copilot Studio workflows are shareable, victims may not realize they are authorizing a malicious application. Datadog refers to this method as “CoPhish.”

Who is most at risk

Organizations that allow users to create or run Copilot Studio agents in their own Entra ID tenant are exposed, especially if administrators can approve application permissions without additional verification. Unprivileged users can still cause damage by consenting to a malicious application, which hands its operator tokens for their account; administrators can make the problem worse by pre-approving permissions for apps within their tenant.

Immediate mitigation steps

Technical teams can take several practical steps to reduce risk quickly:

- Restrict who can create agents: Limit Copilot Studio agent creation to a small number of trusted accounts or disable self-service creation in Entra where possible.

- Harden app consent policies: Require admin consent for permissions that allow write access to mail, calendars, and files. Review and tighten tenant consent settings.

- Monitor OAuth approvals: Audit recent app consent events and revoke suspicious permissions or tokens promptly.

- User education: Train users to recognize unusual consent requests and report unexpected sign-in prompts immediately.
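
The monitoring step above could be sketched roughly as follows. This assumes consent events have already been exported from your audit logs and flattened into a simple record; the `ConsentEvent` shape, the seven-day window, and the `RISKY_SCOPES` set are all hypothetical choices for illustration, not a real log schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative set of write/send scopes to treat as risky (an assumption).
RISKY_SCOPES = frozenset(
    {"Mail.Send", "Mail.ReadWrite", "Calendars.ReadWrite", "Files.ReadWrite.All"}
)


@dataclass(frozen=True)
class ConsentEvent:
    """Hypothetical flattened consent record; real audit logs are more nested."""
    timestamp: datetime
    user: str
    app_name: str
    scopes: tuple


def flag_recent_risky_consents(events, now, window=timedelta(days=7),
                               risky=RISKY_SCOPES):
    """Return (event, risky_scopes) pairs for consents inside the window."""
    cutoff = now - window
    flagged = []
    for event in events:
        hits = sorted(set(event.scopes) & risky)
        # Keep only recent events that granted at least one risky scope.
        if event.timestamp >= cutoff and hits:
            flagged.append((event, hits))
    return flagged
```

Each flagged pair is a candidate for manual review and, if the app is not recognized, for revoking the grant. Passing `now` explicitly keeps the function deterministic and easy to test.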

Longer-term controls and best practices

Beyond immediate mitigations, consider implementing conditional access policies that limit what tokens can do, using least privilege for application permissions, and integrating identity threat detection tools that flag anomalous OAuth usage. Regularly review external app approvals and ensure unverified apps cannot be granted broad permissions by default.
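
As one way to approach the regular review of app approvals, the sketch below compares existing delegated grants against a per-app allowlist and reports anything beyond it. The grant dictionaries borrow the `clientId`, `consentType`, and `scope` property names from Microsoft Graph's `oAuth2PermissionGrant` resource; the `approved` allowlist and the `review_grants` function are assumptions made for this example, and fetching the grants is left out.

```python
def review_grants(grants, approved):
    """List grants whose scopes exceed the allowlist for that app.

    grants:   iterable of dicts with "clientId", "consentType", "scope"
              (scope is a space-delimited string).
    approved: hypothetical allowlist mapping clientId -> set of allowed scopes.
    """
    findings = []
    for grant in grants:
        granted = set(grant.get("scope", "").split())
        # Apps missing from the allowlist have no approved scopes at all.
        excess = sorted(granted - approved.get(grant["clientId"], set()))
        if excess:
            findings.append({
                "clientId": grant["clientId"],
                # "AllPrincipals" means the grant applies to every user.
                "tenant_wide": grant.get("consentType") == "AllPrincipals",
                "excess_scopes": excess,
            })
    # Surface tenant-wide grants first; the sort is stable otherwise.
    findings.sort(key=lambda f: not f["tenant_wide"])
    return findings
```

Treating unknown apps as having an empty allowlist is a deliberate least-privilege default: anything not explicitly approved shows up in the report.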

Conclusion

Agent-based workflows bring productivity gains, but they also expand the attack surface. Treat Copilot Studio agents and any shared workflows as potential supply-chain-like risks: enforce strict creation and consent policies, keep an audit trail, and prepare a rapid response process for revoking tokens and informing affected users.